Learnings from building my own home server

18 Dec 2017 · Learnings

I forgot to include these in my last blog post, so here is the list:

- Arch Linux has official packages for Intel microcode updates (intel-ucode).

- WireGuard is almost there. I'm running OpenVPN for now while waiting for a stable release.

- While traefik is great, I'm concerned about the security model it uses for connecting to Docker: it talks to the Docker unix socket through a mounted volume, which effectively gives it root access on the host. Scary stuff.

- Docker labels are a great signalling mechanism. Update: after seeing multiple bugs with how traefik uses Docker labels, I've found they have limited use-cases, but they work great in those. Don't try to over-architect them for all your metadata.

- Terraform's Docker provider still needs a lot of work. Many updates destroy and recreate containers, even when the change could be applied without a destroy.

- I can't easily proxy SSH traffic to Gitea, since traefik doesn't support TCP proxying yet.

- The docker_volume resource in Terraform is useless, since it doesn't give you any control over the volume's location on the host. (This might be a Docker limitation.)

- The upload block inside a docker_container resource is a great idea: it lets you push configuration files straight into a container. For example, this is how I push configuration into the traefik container:

      upload {
        content = "${file("${path.module}/conf/traefik.toml")}"
        file    = "/etc/traefik/traefik.toml"
      }

Advice

This section is for you if you're venturing into a Docker-heavy Terraform setup:

- Use traefik. It will save you a lot of pain with proxying requests.

- Repeat the ports section for every IP you want to listen on. CIDRs don't work.

- If you want the container to run on boot, you want the following:

      restart               = "unless-stopped"
      destroy_grace_seconds = 10
      must_run              = true

- If you have even a single docker_registry_image resource in your state, you can't run Terraform without internet access.

- Breaking your Docker module into images.tf, volumes.tf, and data.tf (for registry images) works quite well.

- Memory limits on Docker containers can be too constrained. Keep an eye on the logs to see if anything is getting killed.

- Set up Docker TLS auth first. I tried proxying Docker through Apache with basic auth, but it didn't work out well.
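For the memory-limit point, you can also ask Docker directly instead of only watching the logs. The JSON printed by docker inspect includes a State.OOMKilled flag for each container. Below is a minimal Python sketch of that check. It is not part of my setup, and the oom_killed function name is made up for illustration. It assumes you feed it the JSON array that docker inspect prints.

```python
import json


def oom_killed(inspect_output: str) -> list[str]:
    """Return the names of containers that Docker marked as OOM-killed.

    inspect_output is the JSON array printed by `docker inspect <id>...`.
    """
    containers = json.loads(inspect_output)
    names = []
    for container in containers:
        state = container.get("State", {})
        if state.get("OOMKilled", False):
            # docker inspect reports names with a leading slash, e.g. "/mongo".
            names.append(container.get("Name", "").lstrip("/"))
    return names
```

Usage would be something like saving the output of docker inspect $(docker ps -aq) to a file and passing its contents to oom_killed. This only reflects what Docker recorded per container, so the kernel log (dmesg) is still worth a look.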

MongoDB with forceful server restarts

Since my server gets a forceful restart every few days due to power cuts (I'm still working on a backup power supply), I ran into issues with MongoDB being unable to recover cleanly. The lockfile would indicate an unclean shutdown, and MongoDB would then need a manual repair, which sometimes failed.

As a weird, hacky fix: since most of the errors came from MongoDB's WiredTiger storage engine itself, I hypothesized that switching to a more robust engine might spare me these manual repairs. I switched to MongoRocks. It has stopped the repair issue, but the wiki still doesn't like it, and I'm facing this issue: https://github.com/Requarks/wiki/issues/313

However, I don’t have to repair the DB manually, which is a win.

SSHD on a specific interface

My proxy server has the following interface:

eth0 139.59.22.234

It also has an associated Anchor IP from DigitalOcean for static IP use cases (10.47.0.5, which doesn't show up in ifconfig).

I wanted to run the following setup:

eth0:22 -> sshd

Anchor-IP:22 -> simpleproxy -> gitea:ssh

where gitea is the git server hosting git.captnemo.in. This way:

- I could SSH to the proxy server over port 22

- And SSH directly to the Gitea server over port 22, using a different IP address.

Unfortunately, sshd doesn't let you listen on a specific interface (its ListenAddress option takes addresses, not interface names), and since the eth0 IP is non-static, I can't rely on it.

As a result, I’ve resorted to just using 2 separate ports:

22 -> simpleproxy -> gitea:ssh

222 -> sshd

There are some hacky workarounds, such as creating a new service that starts SSHD only after network connectivity is up, but I thought this setup was much more stable.
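The simpleproxy step in this setup is just a dumb TCP forwarder. It accepts a connection on one port and copies bytes in both directions to gitea:ssh. What follows is not simpleproxy itself. It is a minimal Python sketch of the same idea, assuming the Gitea container is reachable as host gitea on port 22. The function names (start_proxy, _handle, _pipe) are made up for illustration.

```python
import socket
import threading


def _pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst until src reaches EOF, then half-close dst."""
    try:
        while True:
            data = src.recv(65536)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # connection reset: fall through and signal EOF downstream
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def _handle(client: socket.socket, target: tuple[str, int]) -> None:
    """Forward one client connection to target, in both directions."""
    try:
        upstream = socket.create_connection(target)
    except OSError:
        client.close()  # e.g. Gitea is down: drop the client
        return
    forward = threading.Thread(target=_pipe, args=(client, upstream), daemon=True)
    forward.start()
    _pipe(upstream, client)  # reverse direction runs on this thread
    forward.join()
    client.close()
    upstream.close()


def start_proxy(listen: tuple[str, int], target: tuple[str, int]) -> socket.socket:
    """Accept connections on `listen` and forward each one to `target`.

    Returns the listening socket, e.g. to find out which port was bound.
    """
    server = socket.create_server(listen)

    def accept_loop() -> None:
        while True:
            try:
                client, _ = server.accept()
            except OSError:
                return
            threading.Thread(target=_handle, args=(client, target), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server
```

On the proxy server this would be something like start_proxy(("0.0.0.0", 22), ("gitea", 22)), followed by threading.Event().wait() to keep the main thread alive. Binding port 22 needs root. A plain byte forwarder is enough for SSH because the session is encrypted end to end between the client and Gitea, so the proxy never has to understand the protocol.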

Wiki.js public pages

I'm running a Wiki.js setup at https://wiki.bb8.fun. A specific requirement I had was public pages, so that I could give people links to specific resources that can be browsed without a login.

However, I wanted the default to be authenticated, and only certain pages to be public. The config for this was surprisingly simple:

YAML config

You need to ensure that defaultReadAccess is false:

    auth:
      defaultReadAccess: false
      local:
        enabled: true

Guest Access

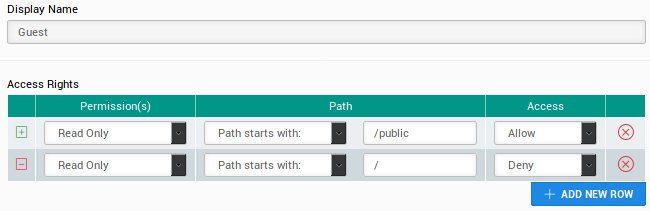

The following configuration is set for the guest user:

Any pages created under the /public directory are now browsable by anyone.

Here is a sample page: https://wiki.bb8.fun/public/nebula
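To make the resulting rule concrete, here is a tiny Python model of what a guest can read in this setup. Nothing is readable by default (defaultReadAccess: false), except pages at or under /public. This is my own sketch of the behaviour described above, not Wiki.js code. The constants and the guest_can_read function are hypothetical, and Wiki.js's own path matching may differ from the segment-aware check used here.

```python
from pathlib import PurePosixPath

# Hypothetical model of the setup: default read access is off for guests,
# and the guest user has a single read rule for the /public path.
DEFAULT_READ_ACCESS = False
GUEST_READ_PREFIX = PurePosixPath("/public")


def guest_can_read(page_path: str) -> bool:
    """Return True if an anonymous visitor may read the page at page_path."""
    if DEFAULT_READ_ACCESS:
        return True
    path = PurePosixPath("/") / page_path.lstrip("/")
    # Allow /public itself and anything below it, but not e.g. /publicity.
    return path == GUEST_READ_PREFIX or GUEST_READ_PREFIX in path.parents
```

Under this model, the sample page /public/nebula is readable by guests, while anything outside /public still requires a login.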

Docker CA Cert Authentication

I wrote a script that goes with the Docker TLS guide to help you set up TLS authentication.

OpenVPN default gateway client-side configuration

I'm running an OpenVPN server on my proxy server. However, I don't always want to use my VPN as the default route, only when I'm on an untrusted network. I still want to be able to connect to the VPN and use it to reach other clients, though.

The solution is two-fold:

Server Side

Make sure you do not have the following in your OpenVPN server.conf:

push "redirect-gateway def1 bypass-dhcp"

Client Side

I created 2 copies of the VPN configuration file. Both of them have identical config, except for this one line:

redirect-gateway def1

If I connect using the configuration that has this line, all my traffic is forwarded over the VPN. If you're using Arch Linux, this is as simple as creating 2 config files:

/etc/openvpn/client/one.conf

/etc/openvpn/client/two.conf

And running systemctl start openvpn-client@one. I've enabled my non-default-route VPN service, so it automatically connects on boot.
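Since the two files differ by exactly one line, you can generate both from a single base config instead of keeping them in sync by hand. Below is a minimal Python sketch of that. The variant names split and full are made up for illustration (they are not the one/two files above), and so are the function names.

```python
from pathlib import Path

# The one line that turns the split-tunnel client config into a full tunnel.
REDIRECT_LINE = "redirect-gateway def1"


def render_variants(base_config: str) -> dict[str, str]:
    """Build two client configs that differ only by the redirect-gateway line.

    "split" keeps your normal default route; "full" sends all traffic over
    the VPN. Any redirect-gateway line already in base_config is dropped.
    """
    lines = [
        line for line in base_config.splitlines()
        if not line.strip().startswith("redirect-gateway")
    ]
    split = "\n".join(lines) + "\n"
    full = split + REDIRECT_LINE + "\n"
    return {"split": split, "full": full}


def write_variants(base_config: str, out_dir: Path) -> list[Path]:
    """Write <name>.conf for each variant into out_dir (e.g. /etc/openvpn/client)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for name, text in render_variants(base_config).items():
        path = out_dir / f"{name}.conf"
        path.write_text(text)
        written.append(path)
    return written
```

With these hypothetical names, systemctl enable openvpn-client@split would bring up the non-default-route VPN on boot. On an untrusted network you would switch to openvpn-client@full. Writing into /etc/openvpn/client needs root.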

If you’re interested in my self-hosting setup, I’m using Terraform + Docker, the code is hosted on the same server, and I’ve been writing about my experience and learnings:

- Part 1, Hardware

- Part 2, Terraform/Docker

- Part 3, Learnings

- Part 4, Migrating from Google (and more)

- Part 5, Home Server Networking

- Part 6, btrfs RAID device replacement

If you have any comments, reach out to me

Published on December 18, 2017