Home Server Networking

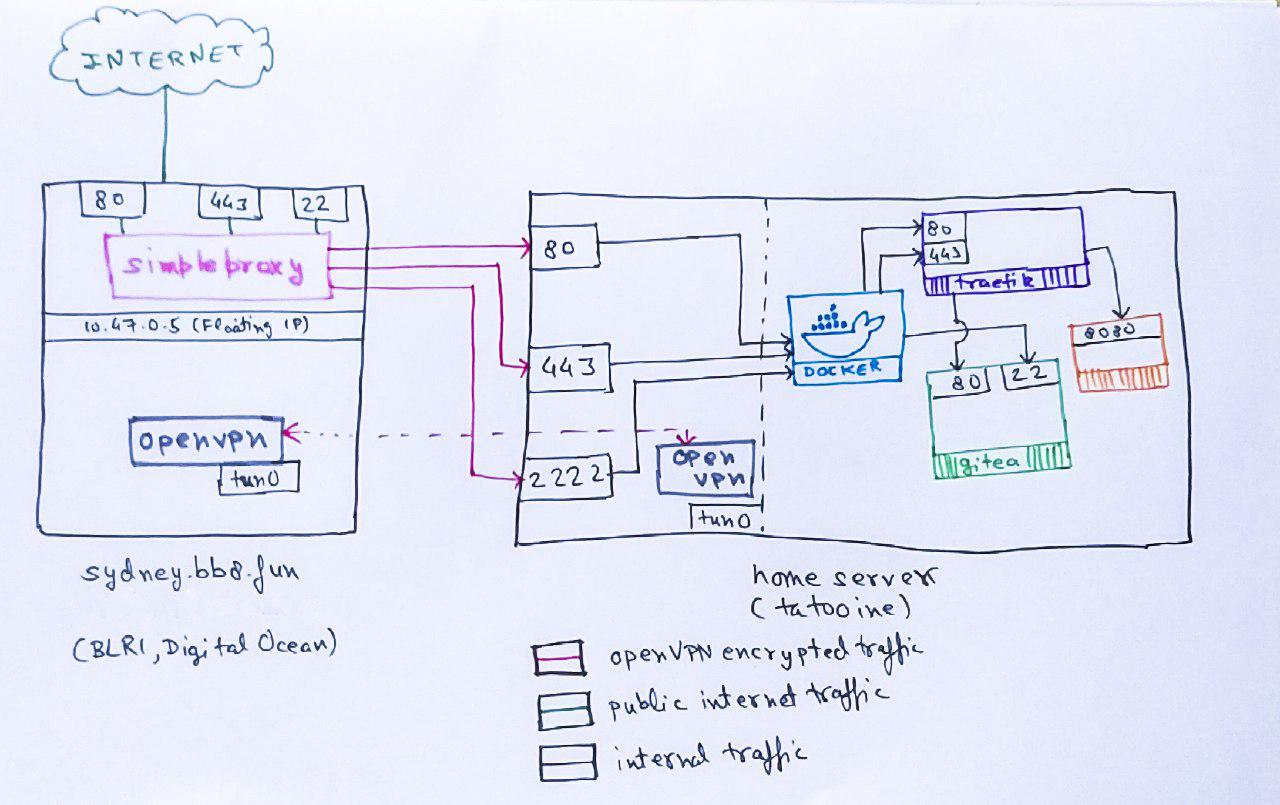

22 Apr 2018

Next in the Home Server series, this post documents how I set up the networking to serve content publicly from my under-the-TV server.

Background

My home server runs on a mix of Docker/Traefik orchestrated via Terraform. The source code is at https://git.captnemo.in/nemo/nebula (self-hosted, dogfooding FTW!) if you wanna take a look.

The ISP is ACT Bangalore [1]. They offer decent bandwidth and I’ve been a customer for a long time.

Public Static IP

In order to host content, you need a stable public IP. Unfortunately, ACT puts all of its customers in Bangalore behind a NAT [2]. As a result, I decided to get a Floating IP from Digital Ocean [3].

The Static IP is attached to a cheap Digital Ocean Droplet ($10/mo). If you resolve bb8.fun, this is the IP you will get:

    Name: bb8.fun
    Address: 139.59.48.222

The droplet has a public static IP of its own as well: 139.59.22.234. I picked a Floating IP because DO gives them for free, and I can switch between instances later without worrying about it.

Floating IP

On the Digital Ocean infrastructure side, this IP is not directly attached to an interface on your droplet. Instead, DO uses something called “Anchor IP”:

Network traffic between a Floating IP and a Droplet flows through an anchor IP, which is an IP address aliased to the Droplet’s public network interface (eth0). You should bind any public services that you want to make highly available through a Floating IP to the anchor IP.

So, now my Droplet has 2 different IPs that I can use:

- Droplet Public IP (139.59.22.234), assigned directly to the eth0 interface.
- Droplet Anchor IP (10.47.0.5), set up as an alias to the eth0 interface.

This doubles the number of services I can listen on. I could, for example, run 2 different web servers, one on each of these IPs.

OpenVPN

In order to establish NAT-punching connectivity between the Droplet and the Home Server, I run an OpenVPN server on the Droplet and openvpn-client on the Home Server [4].

The Digital Ocean Guide is a great resource if you ever have to do this. 2 specific IPs on the OpenVPN network are marked as static:

- Droplet: 10.8.0.1
- Home Server: 10.8.0.14

Home Server - Networking

- The server has a private static IP assigned to its eth0 interface.
- It also has a private static IP assigned to its tun0 interface.

There are primarily 3 kinds of services that I like to run:

- Accessible only from within the home network (Timemachine backups, for eg) (Internal). These I publish on the eth0 interface.
- Accessible only from the public internet (Wiki) (Strictly Public). These I publish on the tun0 interface and proxy via the droplet.
- Accessible from both places (Emby, AirSonic) (Public). These I publish on both the tun0 and the eth0 interfaces on the homeserver.
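The mapping from service kind to interface can be written down as a small lookup table. This is only an illustrative sketch: the dictionary keys and the `services_on` helper are names I made up for this example, and the services listed are just the ones mentioned above.

```python
# Which interfaces each kind of service is published on (from the list above).
# The kind names used as keys are labels invented for this sketch.
INTERFACES_BY_KIND = {
    "internal": ("eth0",),          # home network only
    "strictly_public": ("tun0",),   # reachable only via the droplet
    "public": ("eth0", "tun0"),     # reachable from both places
}

# Example services named in this post, tagged with their kind.
SERVICES = {
    "timemachine": "internal",
    "wiki": "strictly_public",
    "emby": "public",
    "airsonic": "public",
}


def services_on(interface):
    """Return the sorted names of services published on the given interface."""
    return sorted(
        name
        for name, kind in SERVICES.items()
        if interface in INTERFACES_BY_KIND[kind]
    )
```

Calling `services_on("tun0")` lists everything the droplet can reach over the VPN, which is a handy way to double-check what ends up exposed.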

Docker Networking Basics

Docker runs its own internal network for services, and lets you “publish” these services by forwarding traffic from a given interface to them.

In plain docker-cli, this would be:

    docker run --publish 443:443 --publish 80:80 nginx

(This forwards traffic on ports 443 and 80 on all interfaces to the container.)
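Docker's `--publish` flag also accepts an optional host IP in front of the port pair (`ip:hostPort:containerPort`), which is what restricts a published port to a single interface. As a rough sketch, here is a hypothetical helper (`publish_flags` is my own name, not part of any tool) that builds those flags for one IP. The address used in the usage note is the Home Server's OpenVPN IP from earlier in this post.

```python
def publish_flags(ip, ports):
    """Build `docker run` --publish flags that bind each port on one host IP only.

    Each port is published on the same number inside the container,
    using Docker's ip:hostPort:containerPort form.
    """
    flags = []
    for port in ports:
        flags += ["--publish", f"{ip}:{port}:{port}"]
    return flags
```

For example, `publish_flags("10.8.0.14", [443, 80])` yields the arguments for publishing HTTPS and HTTP only on the tun0 address.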

Since I use Terraform, it looks like the following for Traefik:

    # Admin Backend
    ports {
        internal = 1111
        external = 1111
        ip = "${var.ips["eth0"]}"
    }

    ports {
        internal = 1111
        external = 1111
        ip = "${var.ips["tun0"]}"
    }

    # Local Web Server
    ports {
        internal = 80
        external = 80
        ip = "${var.ips["eth0"]}"
    }

    # Local Web Server (HTTPS)
    ports {
        internal = 443
        external = 443
        ip = "${var.ips["eth0"]}"
    }

    # Proxied via sydney.captnemo.in
    ports {
        internal = 443
        external = 443
        ip = "${var.ips["tun0"]}"
    }

    ports {
        internal = 80
        external = 80
        ip = "${var.ips["tun0"]}"
    }

There are 3 “services” exposed by Traefik on 3 ports:

- Traefik Admin Interface
  - Useful for debugging. I leave this in Read-Only mode with no authentication. This is an Internal service.
- HTTP, Port 80
  - This redirects users to the next entrypoint (HTTPS). This is a Public service.
- HTTPS, Port 443
  - This is where most of the traffic flows. This is a Public service.

For all 3 of the above, Docker forwards traffic from both OpenVPN and the home network. OpenVPN lets me access these from my laptop when I’m not at home, which is helpful for debugging issues. However, to keep the Admin Interface internal, it is not published to the internet.

Internet Access

The “bridge” between the Floating IP and the OpenVPN IP (both on the Digital Ocean droplet) is simpleproxy. It is a barely-maintained, 200-line TCP proxy. I picked it because it is easy to use. I specifically looked for a TCP proxy because:

- I did not want to terminate SSL on Digital Ocean, since Traefik was already doing LetsEncrypt cert management for me

- I also wanted to proxy non-web services (more below).

The simpleproxy configuration consists of a few systemd units:

    [Service]
    Type=simple
    WorkingDirectory=/tmp
    # Forward Anchor IP 80 -> Home Server VPN 80
    ExecStart=/usr/bin/simpleproxy -L 10.47.0.5:80 -R 10.8.0.14:80
    Restart=on-abort

    [Install]
    WantedBy=multi-user.target

    [Unit]
    Description=Simple Proxy
    After=network.target

I run 3 of these: 2 for HTTP/HTTPS, and another one for SSH.

While I use simpleproxy for its stability and simplicity, you could also use iptables to achieve the same result.
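To make it concrete what a plain TCP proxy does for each of these units, here is a minimal sketch in Python using asyncio. It is not how simpleproxy itself is implemented, and `pipe` and `start_proxy` are names I picked for this example. It just accepts a connection on one address and relays bytes in both directions to another, without looking at the contents (which is why TLS and SSH pass through untouched).

```python
import asyncio


async def pipe(reader, writer):
    """Copy bytes from reader to writer until EOF, then close the writer.

    Simplification: closing the writer tears down that whole connection,
    so TCP half-close is not preserved in this sketch.
    """
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass
    finally:
        writer.close()


async def start_proxy(listen_host, listen_port, remote_host, remote_port):
    """Listen on (listen_host, listen_port) and relay every connection to the remote."""

    async def handle(client_reader, client_writer):
        try:
            remote_reader, remote_writer = await asyncio.open_connection(
                remote_host, remote_port
            )
        except OSError:
            # Remote is unreachable (VPN down, for example): drop the client.
            client_writer.close()
            return
        # Relay both directions concurrently until both sides are done.
        await asyncio.gather(
            pipe(client_reader, remote_writer),
            pipe(remote_reader, client_writer),
        )

    return await asyncio.start_server(handle, listen_host, listen_port)
```

The equivalent of the unit above would be `start_proxy("10.47.0.5", 80, "10.8.0.14", 80)` followed by `serve_forever()` on the returned server, run inside `asyncio.run`.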

SSH Tunnelling

When I’m on the go, there are 3 different SSH services I might need:

- Digital Ocean Droplet

- Home Server

- Git (gitea runs its own internal git server)

My initial plan was:

- Forward Port 22 Floating IP Traffic to Gitea.
- Use the eth0 interface on the droplet to run the droplet sshd service.
- Keep the Home Server SSH forwarded to OpenVPN, so I can access it over the VPN network.

Unfortunately, that didn’t work out well, because sshd doesn’t support listening on an interface, only on an address. I could have used the Public Droplet IP, but I didn’t like the idea.

The current setup instead involves:

- Running the droplet sshd on a separate port entirely (2222).
- The simpleproxy service forwarding port 22 traffic to port 2222 on the OpenVPN IP, which Docker then publishes to the gitea container’s port 22.

The complete traefik configuration is also available if you wanna look at the entrypoints in detail.

Caveats

Traefik Public Access

You might have noticed that because traefik is listening on both eth0 and tun0, there is no guarantee of a “strictly internal” service via Traefik. Traefik just uses the Host headers in the request (or SNI) to determine the container to which it needs to forward the request. I use *.in.bb8.fun for internally accessible services, and *.bb8.fun for public. But if someone decides to spoof the headers, they can access the Internal service.
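Here is a toy model of why the spoofing works. It's a simplified sketch of Host-based routing, not Traefik's actual code. The hostnames and backend names in `ROUTES` are hypothetical placeholders, and `pick_backend` is a name invented for this example.

```python
# Hypothetical routing table: these hostnames and backends are illustrative only.
ROUTES = {
    "some-app.bb8.fun": "public-app",
    "some-app.in.bb8.fun": "internal-app",
}


def pick_backend(host_header, arrived_on):
    """Choose a backend from the Host header alone.

    `arrived_on` ("eth0" or "tun0") is deliberately ignored: the router
    has the same rules on both interfaces, which is exactly the caveat.
    """
    host = host_header.split(":")[0].lower()  # drop any :port suffix
    return ROUTES.get(host)
```

A request that comes in over tun0 (so, from the internet) but carries `Host: some-app.in.bb8.fun` gets `"internal-app"` back, the same as a request from the home network would.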

Since I’m aware of the risk, I do not publish anything via traefik that I’m not comfortable putting on the internet. Only a couple of services are marked as “internal-also”, and are published on both. Services like Prometheus are not published via Traefik.

2 Servers

Running and managing 2 servers takes a bit more effort, and has more moving parts. But I use the droplet for other tasks as well (running my DNSCrypt Server, for eg).

Original IP Address

Since simpleproxy does not support the Proxy Protocol, neither Traefik nor the Gitea/SSH servers get informed about the original IP Address. I plan to fix that by switching to HAProxy in TCP mode.
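For reference, version 1 of the Proxy Protocol is just a single human-readable line that the proxy sends at the start of the connection, before any client bytes. The sketch below builds that line. `proxy_v1_header` is a name I made up, and the client address in the usage note is a documentation-range placeholder, not a real visitor.

```python
import ipaddress


def proxy_v1_header(src_ip, src_port, dst_ip, dst_port):
    """Build a PROXY protocol v1 header line for a TCP connection.

    Format: "PROXY TCP4|TCP6 <src ip> <dst ip> <src port> <dst port>\r\n"
    """
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if src.version != dst.version:
        raise ValueError("source and destination must be the same IP version")
    family = "TCP4" if src.version == 4 else "TCP6"
    return f"PROXY {family} {src} {dst} {src_port} {dst_port}\r\n".encode("ascii")
```

For a client at 203.0.113.7 hitting the Anchor IP on port 443, `proxy_v1_header("203.0.113.7", 51234, "10.47.0.5", 443)` gives the line a Proxy-Protocol-aware proxy would prepend. The receiving side has to be configured to expect it, otherwise it will treat the line as garbage at the start of the stream.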

If you’re interested in my self-hosting setup, I’m using Terraform + Docker, the code is hosted on the same server, and I’ve been writing about my experience and learnings:

- Part 1, Hardware

- Part 2, Terraform/Docker

- Part 3, Learnings

- Part 4, Migrating from Google (and more)

- Part 5, Home Server Networking

- Part 6, btrfs RAID device replacement

If you have any comments, reach out to me

1. If you get lucky with their customer support, some of the folks I know have a static public IP on their home setup. In my case, they asked me to upgrade to a corporate plan.
2. I once scanned their entire network using masscan. It was fun: https://medium.com/@captn3m0/i-scanned-all-of-act-bangalore-customers-and-the-results-arent-surprising-fecf9d7fe775
3. AWS calls its “permanent” IP addresses “Elastic” and Digital Ocean calls them “Floating”. We really need better names in this industry.
4. Migrating to Wireguard is on my list, but I haven’t found any good documentation on running a hub-spoke network so far.

Published on April 22, 2018